The Unspoken Bias of AI — and Why It Misses the Point Entirely

The quiet contradiction.

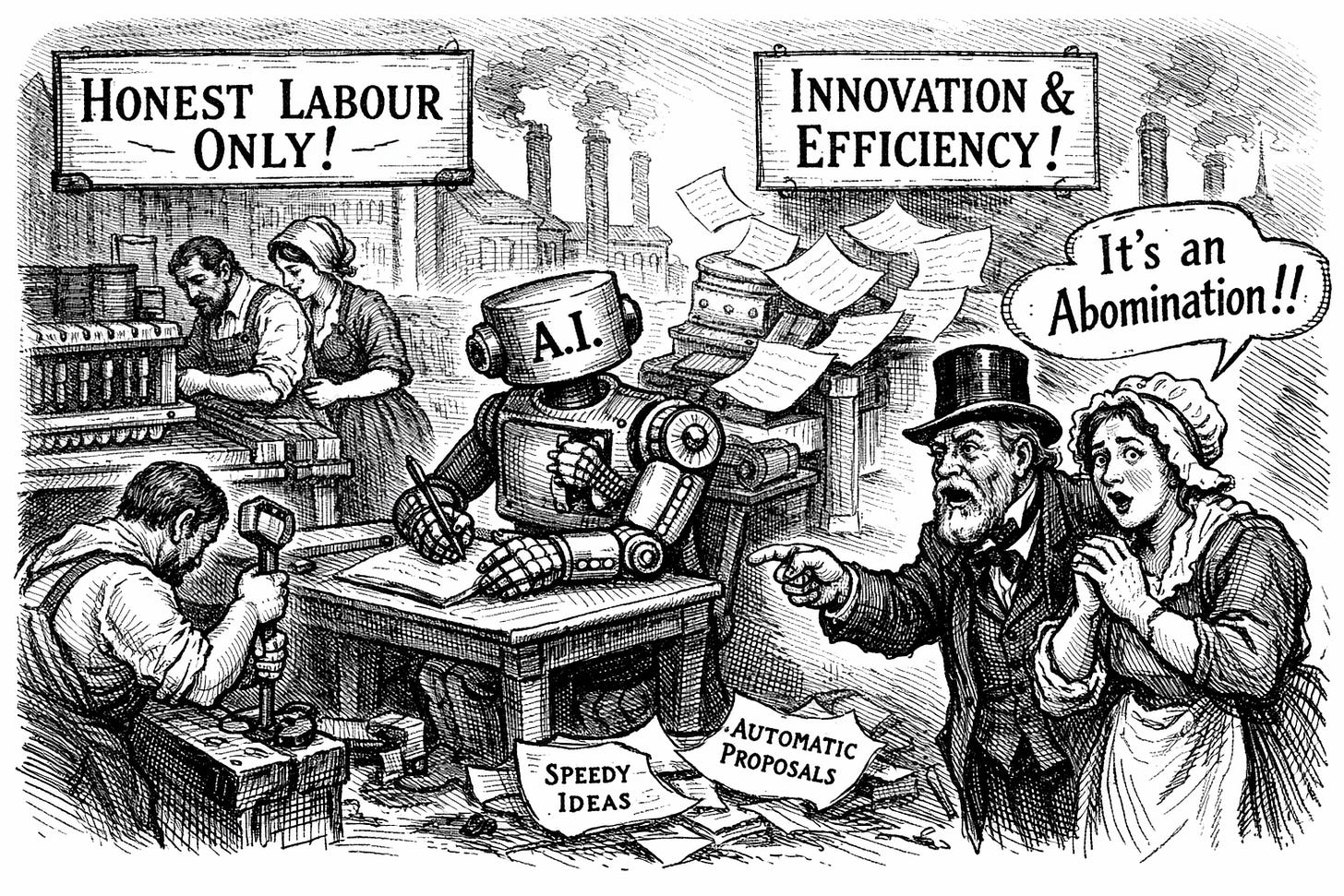

There is a quiet contradiction at the heart of how we talk about AI today.

On one hand, we treat AI as suspect—

a shortcut, a cheat, a dilution of human effort.

On the other hand, we quietly rely on it as if it were infrastructure.

What we have not done is reconcile those two realities.

And in that gap, bias has rushed in.

Not loud bias.

Not declared bias.

But a subtle, corrosive kind that devalues ideas before they are examined.

To understand why this is happening—and why it matters—we need to surface two concepts that remain mostly unspoken.

1. AI Is Not “Someone Else’s Thinking” — It Is Community Knowledge, Compressed

The core misconception is this:

AI is treated as an external author rather than a shared memory.

But modern AI systems are not individual minds.

They are statistical models of collective human output: language, structure, argument patterns, metaphors, explanations, histories.

In other words:

AI is not replacing thinking.

It is compressing community knowledge into a callable form.

When someone uses AI, they are not outsourcing authorship to a machine, or at least they should not be.

They are navigating a map built from the accumulated traces of human reasoning.

That is not fundamentally different from:

Consulting books

Searching the web

Learning from mentors

Absorbing cultural norms of argument and expression

We accept those forms of borrowed cognition without moral panic because they are familiar.

AI simply removes friction.

And friction, historically, has been mistaken for virtue.

2. The Real Problem Isn’t AI — It’s Bias, and It Always Was

Here is the uncomfortable truth:

Bias is the root issue in nearly every “AI problem” we talk about today.

Not computation.

Not capability.

Not even scale.

Bias.

We project it onto:

Training data

Outputs

Usage

People suspected of using the tool

The irony is hard to miss.

We condemn AI for reflecting human bias

while simultaneously introducing new bias against humans who use it.

This is not a technical failure.

It is a social one.

And it follows a familiar pattern:

New tool appears

Power dynamics feel threatened

Moral narratives emerge

Suspicion replaces evaluation

The bias shifts shape, but the mechanism stays the same.

How These Two Forces Collide

When these ideas intersect, something damaging happens.

If AI is treated as external and morally suspect

instead of collective and contextual,

then anyone who uses it becomes suspect by association.

Their ideas are quietly discounted:

Less original

Less earned

Less authentic

Not because the ideas fail—

but because the process offends an unspoken norm.

This is not epistemology.

It is gatekeeping.

The Hidden Cost: We Devalue Insight Based on Origin, Not Impact

Once bias takes hold, evaluation shifts.

Instead of asking:

Is this accurate?

Is this useful?

Does this move understanding forward?

We ask:

How was this produced?

How fast?

With what tools?

This is backwards.

Ideas have never been valuable because of their purity.

They are valuable because of their effect.

Clarity is not a moral failure.

Efficiency is not intellectual fraud.

Leverage is not laziness.

Yet bias trains us to believe otherwise.

AI Didn’t Introduce This Bias — It Exposed It

The most important reframing is this:

AI did not create our discomfort with shared intelligence.

It revealed it.

We are uneasy not because AI thinks—

but because it makes visible how much thinking was always collective.

No one writes alone.

No one reasons in isolation.

No one innovates without standing on accumulated context.

AI simply makes that scaffolding explicit.

And that threatens old stories about individual genius, effort-as-suffering, and legitimacy-through-friction.

Where This Leaves Us

If we continue down the current path:

People will hide their tools

Clarity will be penalized

Performative imperfection will be rewarded

Bias will quietly shape whose ideas are heard

If we change the frame:

AI becomes communal infrastructure

Bias becomes the primary thing we interrogate

Ideas regain primacy over process

Accountability stays human, where it belongs

The future does not hinge on whether AI is used.

It hinges on whether we can confront our reflex to judge how knowledge is accessed instead of what it enables.

The Line Worth Drawing

AI is a mirror, not a mind.

A library, not an author.

A multiplier, not a replacement.

The real danger is not that machines will think for us.

It is that bias will think instead of us—quietly, comfortably, and without challenge.

And unlike AI, bias does not improve unless we force it into the light.