INTENT BEFORE AI

A Manifesto for Deploying AI Without Losing Control

AI is not dangerous because it’s “intelligent”.

AI is dangerous because it’s non-deterministic.

Non-determinism is a feature for creativity and discovery,

but a liability for security, compliance, and governance.

If AI is allowed to act without boundaries,

you don’t have innovation; you have unbounded risk.

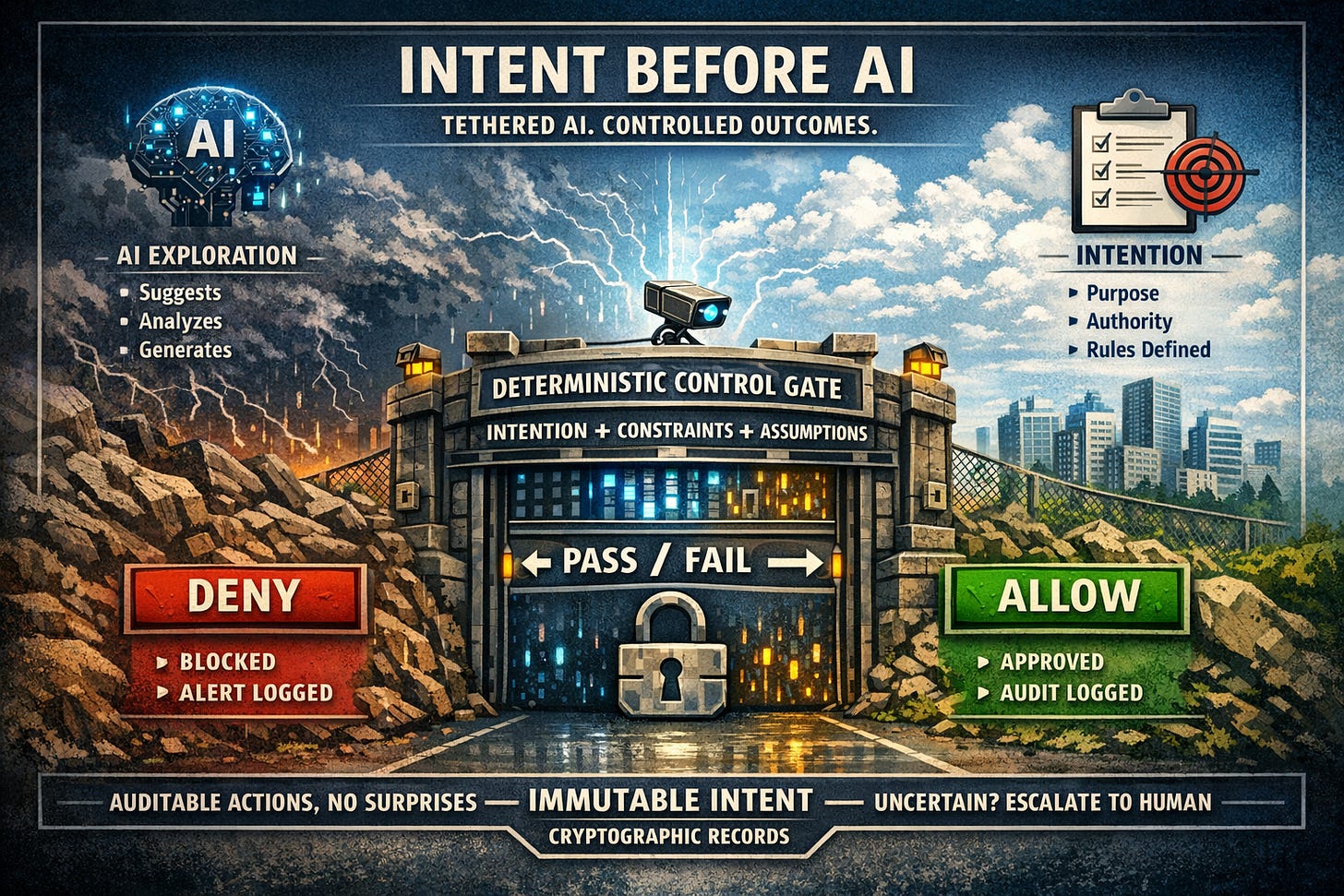

The Core Principle

AI must be tethered to the deterministic bedrock of Intention.

AI explores possibilities

Intention defines what is allowed to happen

Determinism enforces the boundary between the two

This is not about trusting AI.

It’s about deciding, in advance, what outcomes are even admissible.

What We Mean by Intention

Intention is not:

A policy document

A guideline

A best practice

A prompt

Intention is:

Explicit

Versioned

Forward-looking

Enforceable

Immutable once active

Intention declares:

Purpose — why this system exists

Authority — what it may and may not do

Scope — what data, repos, and actions are in play

Assumptions — what must be true or execution halts

Time bounds — when authority starts, changes, or expires

If intention is not declared,

AI has already exceeded its authority.
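As an illustration only, the properties listed above can be approximated in code by a frozen record whose version ID is derived from its contents. This is a minimal Python sketch under our own assumptions. The `Intention` class, its field names, and the use of a SHA-256 hash over a canonical JSON encoding as the version ID are hypothetical choices, not a prescribed schema.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class Intention:
    """Hypothetical intention record (illustrative schema, not a standard)."""
    purpose: str                      # why this system exists
    allowed_actions: frozenset[str]   # authority: anything not listed is not allowed
    scope: frozenset[str]             # data, repos, and actions in play
    assumptions: tuple[str, ...]      # must hold, or execution halts
    valid_from: datetime              # when authority starts
    valid_until: datetime             # when authority expires

    def version_id(self) -> str:
        """Content hash: changing any field produces a different version ID."""
        payload = {
            "purpose": self.purpose,
            # Sets are sorted so the encoding does not depend on iteration order.
            "allowed_actions": sorted(self.allowed_actions),
            "scope": sorted(self.scope),
            "assumptions": list(self.assumptions),
            "valid_from": self.valid_from.isoformat(),
            "valid_until": self.valid_until.isoformat(),
        }
        canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


declared = Intention(
    purpose="Summarize open pull requests for reviewers",
    allowed_actions=frozenset({"read", "summarize"}),
    scope=frozenset({"repo:docs"}),
    assumptions=("ci_green",),
    valid_from=datetime(2025, 1, 1, tzinfo=timezone.utc),
    valid_until=datetime(2025, 12, 31, tzinfo=timezone.utc),
)
print(declared.version_id()[:12])  # short version tag for logs
```

Because the dataclass is frozen, assigning to a field after creation raises `dataclasses.FrozenInstanceError`. Changing a declaration therefore means creating a new record, and that record gets a new version ID.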

The Role of AI (Strictly Limited)

AI is a powerful coprocessor, not a decision-maker.

AI may:

Observe

Analyze

Explain

Suggest

Draft non-authoritative outputs

AI may never:

Decide

Execute

Expand its own authority

Bypass a denial

Fill in missing assumptions

Act optimistically under uncertainty

AI does not get the benefit of the doubt.

Uncertainty results in restraint.
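Here is a hedged sketch of how the AI's role could be enforced as a closed allowlist instead of a list of prohibitions. Anything not explicitly permitted is refused, including decide, execute, and any capability name nobody anticipated. The capability strings and the `check_ai_capability` function are hypothetical. Refusing even a permitted capability while any assumption is unresolved is one possible reading of "uncertainty results in restraint", assumed for this example.

```python
# Hypothetical capability names mirroring the "AI may" list above.
AI_MAY = frozenset({"observe", "analyze", "explain", "suggest", "draft"})


def check_ai_capability(capability: str,
                        unresolved: frozenset[str] = frozenset()) -> str:
    """Default-deny check; returns a verdict string that includes its reason.

    Anything outside AI_MAY (e.g. "decide", "execute", "expand_authority",
    or an unknown name) is refused. Unresolved assumptions are never guessed:
    they cause a refusal even for an otherwise permitted capability.
    """
    if capability not in AI_MAY:
        return "refused: outside AI role"
    if unresolved:
        return "refused: unresolved assumptions " + ", ".join(sorted(unresolved))
    return "permitted"


print(check_ai_capability("suggest"))                      # permitted
print(check_ai_capability("execute"))                      # refused: outside AI role
print(check_ai_capability("draft", frozenset({"owner"})))  # refused: unresolved assumptions owner
```

An allowlist fails closed. A capability added to the system later stays unavailable to the AI until someone deliberately lists it.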

Determinism at the Boundary

Every action, output, or emission must pass a deterministic gate:

Intention ⊗ Constraints ⊗ Assumptions → Outcome

Outcomes are finite and boring — by design:

ALLOW

WITHHOLD

DENY

HALT

If a system cannot deterministically explain why something happened —

or why it didn’t — it is not governed.
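The gate can be sketched as a pure function. The same intention, request, and facts always produce the same outcome, and every outcome carries its reason. This Python sketch is illustrative, not a reference implementation. The classes and field names are assumptions, and so is the mapping of conditions to outcomes:

- HALT for an unmet or unknown assumption.
- DENY for a missing intention, an unauthorized action, an out-of-scope target, or expired authority.
- WITHHOLD for authority that has not started yet.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum
from typing import Mapping, Optional


class Outcome(Enum):
    ALLOW = "ALLOW"
    WITHHOLD = "WITHHOLD"
    DENY = "DENY"
    HALT = "HALT"


@dataclass(frozen=True)
class Intention:  # hypothetical minimal declaration
    allowed_actions: frozenset[str]
    scope: frozenset[str]
    assumptions: tuple[str, ...]
    valid_from: datetime
    valid_until: datetime


@dataclass(frozen=True)
class Request:
    action: str
    target: str
    at: datetime  # evaluation time is an input, never read from the clock


@dataclass(frozen=True)
class Decision:
    outcome: Outcome
    reason: str  # every outcome carries its explanation


def gate(intention: Optional[Intention], request: Request,
         facts: Mapping[str, bool]) -> Decision:
    """Pure function: identical inputs always yield an identical Decision."""
    if intention is None:
        return Decision(Outcome.DENY, "no declared intention")
    for assumption in intention.assumptions:
        # A missing fact counts as unmet: the gate never fills in assumptions.
        if not facts.get(assumption, False):
            return Decision(Outcome.HALT, f"assumption not satisfied: {assumption}")
    if request.action not in intention.allowed_actions:
        return Decision(Outcome.DENY, f"action not authorized: {request.action}")
    if request.target not in intention.scope:
        return Decision(Outcome.DENY, f"target out of scope: {request.target}")
    if request.at >= intention.valid_until:
        return Decision(Outcome.DENY, "authority expired")
    if request.at < intention.valid_from:
        return Decision(Outcome.WITHHOLD, "authority not yet active")
    return Decision(Outcome.ALLOW, "within declared intention")


intent = Intention(
    allowed_actions=frozenset({"comment"}),
    scope=frozenset({"repo:docs"}),
    assumptions=("ci_green",),
    valid_from=datetime(2025, 1, 1, tzinfo=timezone.utc),
    valid_until=datetime(2026, 1, 1, tzinfo=timezone.utc),
)
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
print(gate(intent, Request("comment", "repo:docs", now), {"ci_green": True}).outcome.value)  # ALLOW
print(gate(intent, Request("merge", "repo:docs", now), {"ci_green": True}).outcome.value)    # DENY
print(gate(intent, Request("comment", "repo:docs", now), {}).outcome.value)                  # HALT
```

`gate` takes the evaluation time as `Request.at` instead of reading the clock. This keeps the function deterministic, so any past decision can be reproduced from its recorded inputs.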

Why This Matters

This approach:

Scales AI without scaling liability

Enables speed without sacrificing control

Makes AI deployable in regulated, adversarial, and high-value environments

Produces audit-grade evidence instead of post-incident narratives

Most systems try to train AI to behave.

We do something harder and more reliable:

We decide what behavior is allowed to exist at all.

The Bottom Line

AI is the engine.

Intention is the guardrail.

Determinism is the contract.

Without all three,

you don’t have a system —

you have an eventual incident.